A new paper from Microsoft Research titled “The Era of 1-bit LLMs: All Large Language Models are in 1.58 Bits” introduces a significant advancement in efficient large language model (LLM) design. The paper presents BitNet b1.58, an LLM variant in which every parameter is ternary, constrained to one of three values: -1, 0, or +1. A value with three possible states carries log2(3) ≈ 1.58 bits of information, hence the name.
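To make the 1.58-bit figure concrete, here is a minimal sketch that computes the information content of one ternary weight and shows one way such weights could be stored compactly. The base-3 packing of five weights per byte (`pack5`, `unpack5`) is a hypothetical illustration, not a storage format described in the paper; it works because 3^5 = 243 fits within the 256 values of a byte.

```python
import math

TERNARY_VALUES = (-1, 0, 1)


def bits_per_ternary_weight() -> float:
    """Information content, in bits, of one value drawn from {-1, 0, +1}."""
    return math.log2(len(TERNARY_VALUES))


def pack5(weights):
    """Pack five ternary weights into one byte as base-3 digits.

    Hypothetical scheme for illustration only.
    """
    assert len(weights) == 5 and all(w in TERNARY_VALUES for w in weights)
    byte = 0
    for w in weights:
        byte = byte * 3 + (w + 1)  # map -1, 0, +1 to digits 0, 1, 2
    return byte


def unpack5(byte):
    """Recover the five ternary weights stored by pack5."""
    digits = []
    for _ in range(5):
        byte, digit = divmod(byte, 3)
        digits.append(digit - 1)  # map digits 0, 1, 2 back to -1, 0, +1
    return digits[::-1]


print(round(bits_per_ternary_weight(), 2))  # 1.58
packed = pack5([1, -1, 0, 0, 1])
print(unpack5(packed))  # [1, -1, 0, 0, 1]
```

Packing five weights per byte costs 1.6 bits per weight, which is close to the 1.58-bit theoretical minimum.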

Key Achievements

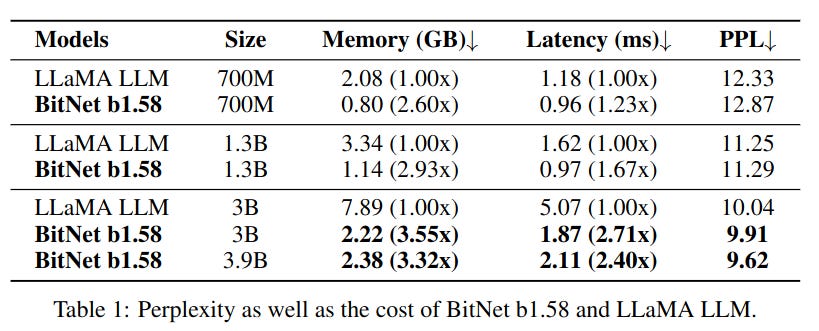

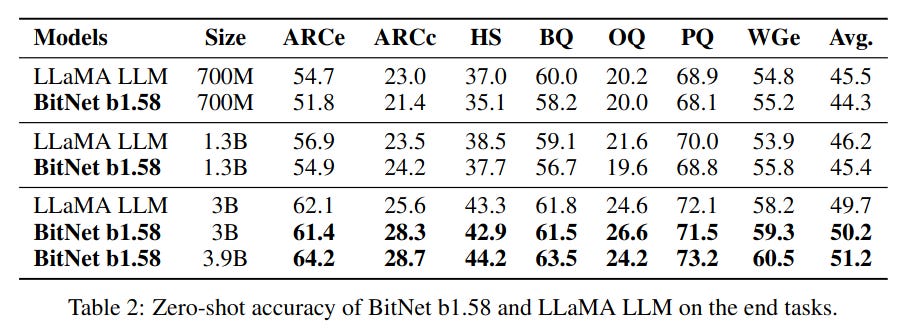

– Matches performance of 16-bit LLMs in terms of perplexity and zero-shot accuracy on downstream tasks, starting from 3B parameters. The 3.9B parameter BitNet b1.58 outperforms the 3B LLaMA LLM baseline.

– Reduces memory usage by 3.55x and latency by 2.71x at the 3B size compared to the 16-bit LLaMA LLM baseline, with larger gains observed at the 7B and 70B model sizes.

– Estimated to reduce the energy consumed by matrix-multiplication arithmetic by 71.4x on 7nm chips.

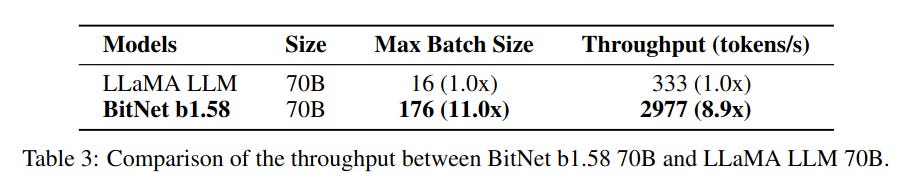

– Enables 8.9x higher throughput than the 70B LLaMA LLM baseline when deployed on two 80GB A100 GPUs.

Implications

The ternary parameterization of BitNet b1.58 enables a new computation paradigm for LLMs: because every weight is -1, 0, or +1, matrix multiplication needs almost no multiplications and reduces to integer additions and subtractions instead of floating-point operations. This could have profound implications:

– Paves the way for specialized hardware optimized for efficient 1-bit LLM inference.

– Allows deployment of larger LLMs on edge devices due to reduced memory and computational requirements.

– Mitigates environmental impact and economic costs associated with training and deploying massive models.
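The addition-only matrix multiplication described above can be sketched in a few lines. This is a plain-Python illustration under the assumption that activations are already integers; the function name `ternary_matvec` is hypothetical, and this is not the paper's optimized kernel.

```python
def ternary_matvec(W, x):
    """Compute W @ x where every entry of W is -1, 0, or +1.

    Only additions and subtractions are used; no multiplication occurs.
    """
    out = []
    for row in W:
        acc = 0
        for w, xi in zip(row, x):
            if w == 1:
                acc += xi   # +1 weight: add the activation
            elif w == -1:
                acc -= xi   # -1 weight: subtract the activation
            # 0 weight: the activation is skipped entirely
        out.append(acc)
    return out


W = [[1, 0, -1],
     [-1, 1, 1]]
x = [3, 5, 2]
print(ternary_matvec(W, x))  # [1, 4]
```

Zero weights contribute nothing and can be skipped, which is one reason a ternary format may suit specialized hardware better than a general floating-point multiply-accumulate unit.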

The results suggest a new scaling law: 13B, 30B and 70B BitNet b1.58 models are more efficient, in terms of latency, memory usage and energy consumption, than 3B, 7B and 13B FP16 models respectively. This demonstrates BitNet b1.58’s potential as a scalable and sustainable foundation for next-generation LLMs.

The performance achieved by BitNet b1.58 points toward a new era of efficient yet accurate 1-bit large language models. Its ternary parameterization preserves model quality at the scales tested while sharply reducing memory, latency and energy use. As model sizes continue to grow, techniques like BitNet b1.58 will be crucial for developing LLMs that are both high-quality and broadly accessible.