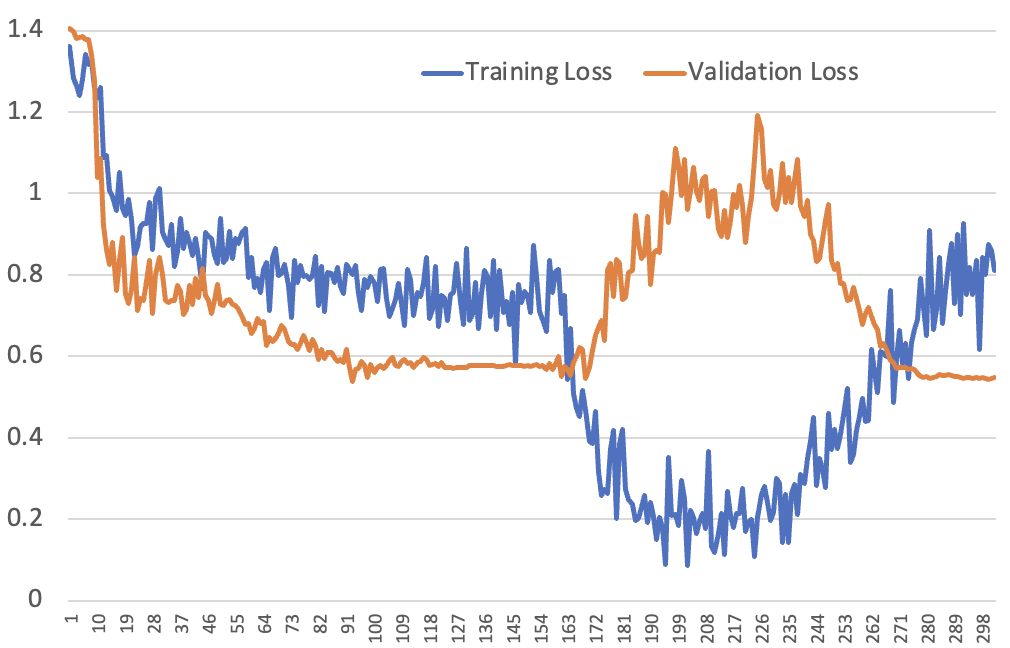

Recent experiments with large language models (LLMs) have shown that they can rapidly memorize examples from a dataset after seeing them just once. This contradicts the common understanding that neural networks are sample-inefficient and need many exposures to an example before they learn it. The discovery could change how we train and use LLMs. It also raises concerns about catastrophic forgetting and about how effective data augmentation really is. Further research and experimentation are needed to fully understand and harness this phenomenon.

Read more at fast.ai…