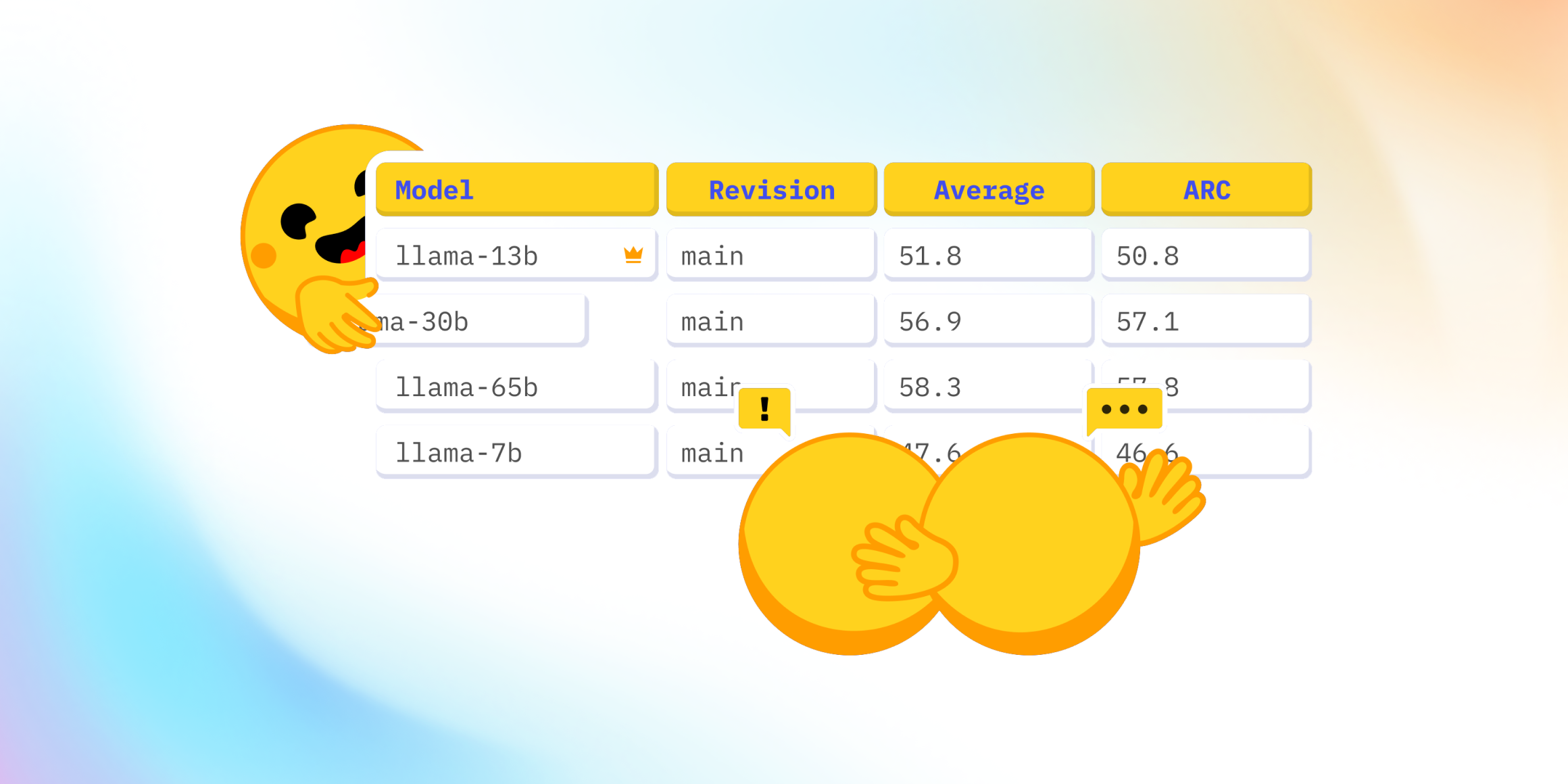

GPT-4: The Open LLM Leaderboard, which compares open-access large language models, has sparked a discussion about discrepancies in the evaluation numbers reported for the LLaMA model. This article investigates how three MMLU evaluation implementations differ: the EleutherAI LM Evaluation Harness, the original UC Berkeley implementation, and Stanford CRFM’s HELM benchmark. The findings show that different implementations of the same benchmark yield different scores and even different model rankings, which underscores the importance of open, standardized, and reproducible benchmarks for comparing models and fostering research in the field.
