A new study from researchers at UC San Diego raises concerns about the reliability and robustness of code generated by large language models (LLMs) like GPT-4 and Llama 2.

The researchers evaluated several state-of-the-art LLMs on a new benchmark called ROBUSTAPI, which contains more than 1,200 real-world Java programming questions from Stack Overflow. The benchmark specifically checks for proper usage of Java APIs to avoid common mistakes that could lead to crashes, memory leaks, or other issues when the code is deployed in production environments.

To evaluate code reliability, the researchers developed an API Checker that analyzes the structure of generated code snippets using abstract syntax trees. It checks that proper API usage rules are followed, like exception handling or resource management conventions. Violations of expected API patterns are flagged as errors, even if the code functionally executes. This static analysis method provides full coverage and aligns well with software engineering notions of reliability, versus just testing functional correctness. The API Checker represents a novel way to benchmark code generation systems on robustness concerns relevant for real-world usage.
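To make the idea concrete, here is a minimal sketch of an AST-based rule check. It is not the researchers' API Checker, which targets Java. It applies the same principle to Python source using the standard `ast` module, and the single rule it enforces (every `open()` call must be the context expression of a `with` statement) is a simplified stand-in chosen for illustration. The function name `find_unmanaged_open_calls` is hypothetical.

```python
import ast


def find_unmanaged_open_calls(source: str) -> list:
    """Return line numbers of open() calls not managed by a `with` statement.

    Assumption: this one rule is a toy stand-in for a real API usage rule set.
    """
    tree = ast.parse(source)

    # Record every expression used directly as a `with` context manager.
    managed = set()
    for node in ast.walk(tree):
        if isinstance(node, (ast.With, ast.AsyncWith)):
            for item in node.items:
                managed.add(id(item.context_expr))

    # Flag bare open(...) calls that are not one of those expressions.
    violations = []
    for node in ast.walk(tree):
        if (
            isinstance(node, ast.Call)
            and isinstance(node.func, ast.Name)
            and node.func.id == "open"
            and id(node) not in managed
        ):
            violations.append(node.lineno)
    return sorted(violations)


SAFE = "with open('a.txt') as f:\n    data = f.read()\n"
RISKY = "f = open('a.txt')\ndata = f.read()\n"

print(find_unmanaged_open_calls(SAFE))   # no violations expected
print(find_unmanaged_open_calls(RISKY))  # the open() on line 1 is flagged
```

Both sample snippets would read the file when executed, yet only the second is flagged. That mirrors the point above: a structural check can report a misuse that a purely functional test would let through.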

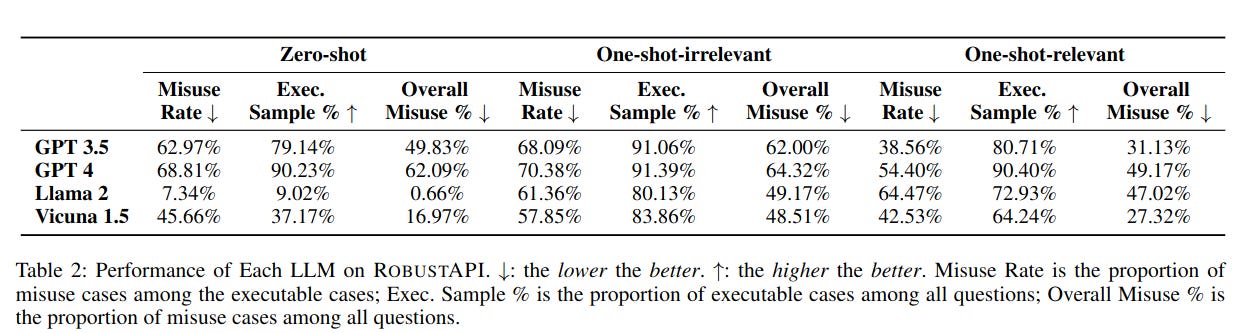

The researchers systematically evaluated the code generation capabilities of several state-of-the-art LLMs, including GPT-3, GPT-4, Llama 2 and Vicuna. Across the ROBUSTAPI dataset, the models were prompted with real Java programming questions and asked to provide code snippets that use specific APIs.

Surprisingly, the results showed that even the most advanced LLMs still frequently generate code with API misuses – up to 70% of the time in some cases. Even GPT-4, touted as much more advanced than GPT-3, had higher rates of API misuse compared to its predecessor. Given OpenAI’s claims that GPT-4 has 40% greater coding ability, it was startling that its code was still so prone to subtle reliability issues.

Additional experiments explored whether providing examples would reduce errors (the three prompt settings are defined at the end of this article). In most cases, an irrelevant example improved syntactic quality but did not affect API misuse rates. However, when given highly tailored examples demonstrating correct usage, some models like GPT-3 did show meaningful improvements.

Yet even the best results remained far below human reliability standards, with API misuses still seen in 30-50% of code snippets. The findings highlight a considerable gap between LLMs’ raw text generation prowess and the abilities needed for real-world software development. The models appear proficient at superficial coding tasks but lack the robustness required for mission-critical systems.

This study reveals fundamental limitations of current LLMs’ code generation capabilities when held to higher bars for safety, security, and reliability – criteria that will be crucial as AI assumes greater roles in engineering workflows.

To address this, static analysis tools could be used alongside AI generation to identify errors and possibly feed them back to the models as additional guidance. Providing the models with correct API usage examples was also found to help in some cases.
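One way such a feedback loop might look is sketched below. Everything in it is an assumption for illustration and is not a procedure taken from the study: `generate` stands in for a call to any code model, `check` for any static analyzer that returns a list of violation messages, and the retry wording and `max_rounds` limit are arbitrary choices.

```python
from typing import Callable, List, Tuple


def generate_with_feedback(
    prompt: str,
    generate: Callable[[str], str],      # hypothetical: wraps a code model
    check: Callable[[str], List[str]],   # hypothetical: wraps a static analyzer
    max_rounds: int = 3,
) -> Tuple[str, List[str]]:
    """Regenerate code until the checker reports no violations or rounds run out.

    Returns the last generated code and any violations still present in it.
    """
    if max_rounds < 1:
        raise ValueError("max_rounds must be at least 1")

    current_prompt = prompt
    code, violations = "", []
    for _ in range(max_rounds):
        code = generate(current_prompt)
        violations = check(code)
        if not violations:
            break
        # Append the analyzer's findings so the next attempt can address them.
        feedback = "\n".join(f"- {v}" for v in violations)
        current_prompt = (
            f"{prompt}\n\n"
            f"The previous answer had these API misuses:\n{feedback}\n"
            f"Please return corrected code."
        )
    return code, violations
```

The caller still receives the remaining violations when the round limit is reached, so unreliable code is never silently accepted.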

The findings indicate there is still substantial room for improvement to turn LLMs into truly useful AI coding assistants. While the raw capabilities are impressive, ensuring safety and reliability of the generated code remains an open challenge on the path towards more human-like code generation.

Zero-shot: The LLM is given just the coding prompt without any examples.

One-shot-irrelevant: The prompt includes one example that uses a different, irrelevant API. This is meant to prime the model on the expected code format.

One-shot-relevant: The prompt includes one example that properly uses the same API as the test question. This tests whether the model can learn from a good usage example.

The one-shot conditions explore whether providing in-context examples helps reduce API misuse rates. The irrelevant example is meant to isolate syntax priming from learning API semantics. The relevant example specifically teaches proper API usage patterns.
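The three settings can be sketched as a small prompt builder. The prompt template, the `Example` fields, and the function name `build_prompt` are all hypothetical; the exact wording used in the study is not given here, so treat this only as an illustration of how the conditions differ.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Example:
    api: str       # API the demonstration uses
    question: str  # demonstration question
    answer: str    # demonstration code


def build_prompt(question: str, api: str, setting: str, pool: List[Example]) -> str:
    """Assemble a prompt for one of the three evaluation settings."""
    task = f"Question: {question}\nUse the API: {api}\nAnswer:"

    if setting == "zero-shot":
        # No demonstration at all, only the coding task.
        return task
    if setting == "one-shot-relevant":
        # Demonstration uses the same API as the test question.
        candidates = [e for e in pool if e.api == api]
    elif setting == "one-shot-irrelevant":
        # Demonstration uses a different API, so it shows format only.
        candidates = [e for e in pool if e.api != api]
    else:
        raise ValueError(f"unknown setting: {setting}")

    if not candidates:
        raise LookupError(f"no example available for setting {setting!r}")

    demo = candidates[0]
    shot = (
        f"Question: {demo.question}\nUse the API: {demo.api}\n"
        f"Answer:\n{demo.answer}\n\n"
    )
    return shot + task
```

Keeping the task text identical across settings means any difference in misuse rates can be attributed to the demonstration alone.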