If you care about getting detectors onto tiny devices without a thicket of post-processing hacks, YOLO26 is a breath of fresh air. After a run of ever-heavier releases (v8→v13 layering in attention, custom losses, more complex heads), this one trims the fat and optimizes for deployment first.

What actually changed

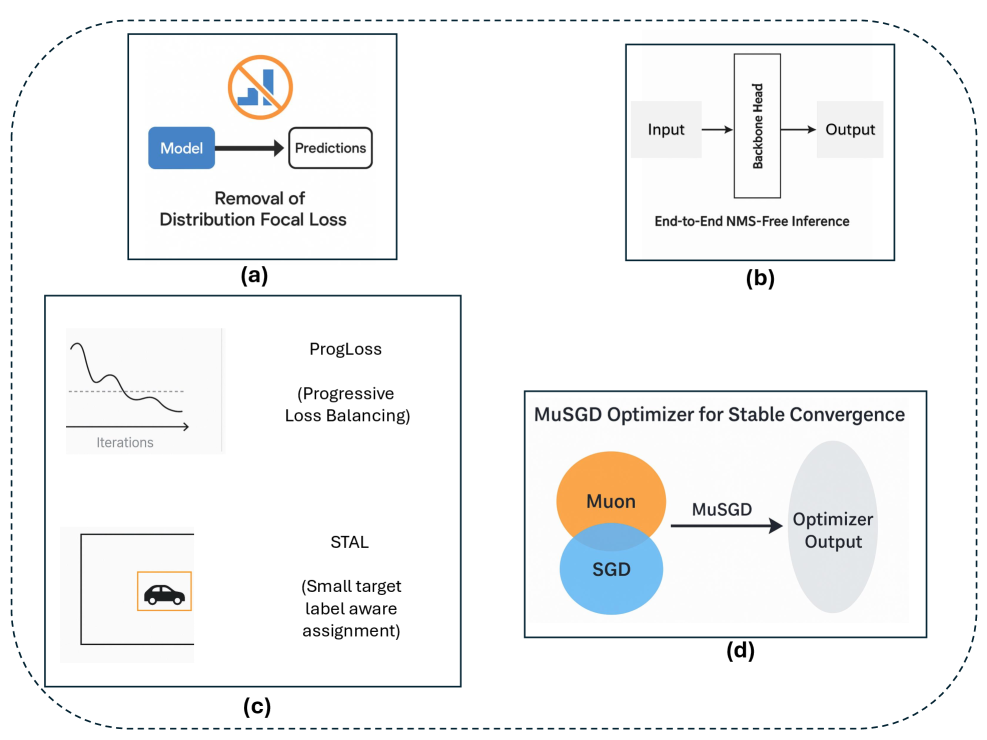

- DFL removed. Dropping Distribution Focal Loss (DFL) from box regression gives a simpler head, cleaner exports, and lower latency.

- No NMS. Predictions are end-to-end, so there is no non-maximum suppression (NMS) step: fewer moving parts and no confidence/IoU thresholds to hand-tune.

- ProgLoss + STAL. Progressive Loss Balancing (ProgLoss) and Small-Target-Aware Label Assignment (STAL) improve training stability and small-object detection without bulky attention modules.

- MuSGD. A hybrid of SGD and the Muon optimizer that delivers faster, steadier convergence in practice.

- Measured wins. Up to 43% faster CPU inference for the nano variant, competitive accuracy across model sizes (e.g., ~51%+ mAP for “m”, ~53%+ for “l”), and lower latency than recent baselines.
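To make the NMS point concrete, here is a minimal, illustrative sketch in plain Python contrasting classic greedy NMS with the kind of decoding an NMS-free head needs. It assumes boxes in `(x1, y1, x2, y2)` form and assumes the end-to-end head already emits at most one box per object (the usual premise of NMS-free designs). The function names and default thresholds are hypothetical; this is not YOLO26's actual decoder.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(boxes: List[Box], scores: List[float],
               score_thr: float = 0.25, iou_thr: float = 0.45) -> List[int]:
    """Classic post-processing: two thresholds plus a pairwise IoU loop."""
    order = sorted((i for i, s in enumerate(scores) if s >= score_thr),
                   key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # Keep a box only if it does not overlap any already-kept box too much.
        if all(iou(boxes[i], boxes[k]) < iou_thr for k in keep):
            keep.append(i)
    return keep


def nms_free_decode(scores: List[float], score_thr: float = 0.25,
                    max_det: int = 300) -> List[int]:
    """NMS-free style (hypothetical): with one prediction per object,
    decoding reduces to a score filter plus top-k, with no IoU loop."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return [i for i in order if scores[i] >= score_thr][:max_det]


# Dense (one-to-many) output: boxes 0 and 1 cover the same object.
dense_boxes = [(10, 10, 50, 50), (12, 11, 51, 49), (100, 100, 140, 150)]
dense_scores = [0.9, 0.8, 0.7]
print(greedy_nms(dense_boxes, dense_scores))  # [0, 2]

# One-to-one output: the duplicate was never emitted in the first place.
print(nms_free_decode([0.9, 0.7]))  # [0, 1]
```

The NMS path carries an IoU threshold and a quadratic-worst-case loop that has to be reimplemented or plugin-ported per runtime; the NMS-free path is a sort and a filter, which is what makes it easier to keep inside an exported graph.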

Why this matters for shipping models

- Smoother exports. ONNX, TensorRT, CoreML, TFLite, and OpenVINO conversions hit fewer edge-case ops, since there is no DFL decode or NMS step left in the graph.

- INT8/FP16 stay stable. The streamlined head tolerates low-precision math, keeping accuracy largely intact while cutting memory and energy use.

- Less glue code. Ditching NMS eliminates a common source of platform-specific bugs and perf cliffs.

- Predictable latency. The gains are most noticeable on CPU-bound paths and edge accelerators, where every millisecond counts.
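For readers less familiar with what INT8 does to a tensor, here is a minimal sketch of symmetric per-tensor quantization, one common scheme; TensorRT, OpenVINO, and TFLite each use their own calibration methods, so treat this as an illustration only. It shows why calibrating on representative data matters: a single large value stretches the scale and coarsens every other value in the tensor. The helper names and sample values are hypothetical.

```python
from typing import List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor INT8: map [-max|x|, +max|x|] onto [-127, 127].
    Assumes a non-empty list."""
    max_abs = max(abs(v) for v in values) or 1.0  # avoid a zero scale
    scale = max_abs / 127.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Map INT8 codes back to floats."""
    return [x * scale for x in q]


def max_abs_error(original: List[float], recovered: List[float]) -> float:
    """Worst-case absolute round-trip error."""
    return max(abs(a - b) for a, b in zip(original, recovered))


clean = [0.01, -0.02, 0.5, 1.0]
with_outlier = [0.01, -0.02, 0.5, 50.0]  # same small values, one outlier
for name, acts in (("clean", clean), ("outlier", with_outlier)):
    q, scale = quantize_int8(acts)
    # Measure error only on the three small values both tensors share.
    err = max_abs_error(acts[:3], dequantize(q, scale)[:3])
    print(f"{name}: scale={scale:.4f}, worst error on small values={err:.4f}")
```

The round-trip error per value is bounded by `scale / 2`, so anything that inflates the range (outlier activations, wide regression targets) costs precision everywhere else in the tensor.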

Perfect fits

- Jetson/Orin, Snapdragon, smart cams. Real-time without babysitting post-processing kernels.

- Robotics & drones. Lower end-to-end latency can be the difference between smooth control loops and jitter.

- Manufacturing QC. High throughput, tight SLAs, fewer moving parts in the stack.

- CPU-first deployments. For when GPUs are scarce or off the table.

Trade-offs (and why they’re sensible)

Yes, YOLO26 pares back architectural “sophistication.” But the payoff is tangible where it counts: simpler heads, portable graphs, no NMS thresholds to retune per device, and accuracy that holds up, especially on small targets, thanks to ProgLoss + STAL.

A quick migration checklist

- Start with exports. Export to ONNX, then on to TensorRT, TFLite, or OpenVINO; verify dynamic shapes and opset support, with no DFL decode ops to work around.

- Quantize early. Calibrate INT8 on your domain data and check that small-object classes remain stable; the simplified head should help here, but verify it on your own classes.

- Trim the pipeline. Remove NMS code paths and any IoU-threshold configs wired into your serving tier.

- Benchmark end-to-end. Measure total frame latency (pre + infer + post), not just model time; this is where the NMS removal shows up.

- Train with MuSGD. Expect fewer restarts and smoother curves; keep an eye on small-class recall to validate STAL/ProgLoss gains.

- Edge realism. Validate on your target clock/governor settings and thermals; the simplified head tends to degrade more gracefully.
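For the "benchmark end-to-end" step, here is a minimal stdlib-only timing harness, offered as a sketch rather than a prescribed tool. The three stage callables are hypothetical stand-ins; swap in your own preprocessing, runtime call (ONNX Runtime, TensorRT, and so on), and decoding. It reports the median and a nearest-rank p95 per stage, since tail latency is what breaks control loops and SLAs on throttled edge hardware.

```python
import math
import statistics
import time
from typing import Any, Callable, Dict, List


def percentile(samples: List[float], pct: float) -> float:
    """Nearest-rank percentile (pct in 0..100) of a non-empty sample list."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))  # 1-based rank
    return ordered[rank - 1]


def benchmark_pipeline(preprocess: Callable[[Any], Any],
                       infer: Callable[[Any], Any],
                       postprocess: Callable[[Any], Any],
                       frames: List[Any],
                       warmup: int = 5) -> Dict[str, Dict[str, float]]:
    """Time each stage and the end-to-end total per frame.

    Returns p50/p95 in milliseconds per stage. Requires len(frames) > warmup.
    """
    timings: Dict[str, List[float]] = {"pre": [], "infer": [], "post": [], "total": []}
    for idx, frame in enumerate(frames):
        t0 = time.perf_counter()
        x = preprocess(frame)
        t1 = time.perf_counter()
        y = infer(x)
        t2 = time.perf_counter()
        postprocess(y)
        t3 = time.perf_counter()
        if idx < warmup:
            continue  # drop warm-up frames (cold caches, lazy init, clock ramp-up)
        for key, dt in (("pre", t1 - t0), ("infer", t2 - t1),
                        ("post", t3 - t2), ("total", t3 - t0)):
            timings[key].append(dt * 1000.0)
    return {stage: {"p50": statistics.median(v), "p95": percentile(v, 95)}
            for stage, v in timings.items()}


def fake_pre(frame: Any) -> Any:  # hypothetical stand-in for resize/normalize
    time.sleep(0.0005)
    return frame


def fake_infer(x: Any) -> Any:  # hypothetical stand-in for the model call
    time.sleep(0.002)
    return x


def fake_post(y: Any) -> Any:  # where NMS would run in an NMS-based pipeline
    time.sleep(0.0002)
    return y


stats = benchmark_pipeline(fake_pre, fake_infer, fake_post, frames=list(range(25)))
for stage, s in stats.items():
    print(f"{stage:>5}: p50={s['p50']:.2f} ms  p95={s['p95']:.2f} ms")
```

Run it on the target device under its real clock governor and thermal conditions; comparing the `post` and `total` rows before and after migration is the most direct way to see what dropping NMS buys you.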

Benchmarks at a glance

On common servers (TensorRT FP16) and edge devices, YOLO26 sits in a sweet spot: competitive mAP versus contemporary detectors like RT-DETR variants, with consistently lower latency. On CPUs, the nano model’s ~43% speedup over prior YOLO baselines is the standout for budget hardware. Jetson-class deployments benefit the most from the NMS-free path and quantization resilience.
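Headline figures like "43% faster" can be read two ways, and the difference matters when sizing edge hardware; check the paper for how the figure is defined. The arithmetic for both readings, as a quick sketch with hypothetical helper names:

```python
def throughput_gain_from_latency_cut(cut: float) -> float:
    """If per-frame latency drops by `cut` (0.43 = 43%), throughput scales by 1 / (1 - cut)."""
    return 1.0 / (1.0 - cut)


def latency_cut_from_throughput_gain(gain: float) -> float:
    """If throughput rises by `gain` (0.43 = 43%), latency drops by 1 - 1 / (1 + gain)."""
    return 1.0 - 1.0 / (1.0 + gain)


print(f"{throughput_gain_from_latency_cut(0.43):.2f}x throughput")    # 1.75x throughput
print(f"{latency_cut_from_throughput_gain(0.43):.1%} lower latency")  # 30.1% lower latency
```

A 43% latency cut is worth roughly 1.75x the frames per second, while 43% more throughput only trims latency by about 30%.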

Bottom line

If you’ve wrestled with exporting v12/v13 graphs, fought NMS performance on ARM, or watched INT8 nuke small-object recall, YOLO26’s “simplify everything” ethos is the practical upgrade. It’s tuned for the realities of edge deployment (lean, portable, and fast) without giving up the accuracy you need.

Read more in the YOLO26 paper: https://arxiv.org/html/2509.25164v1.