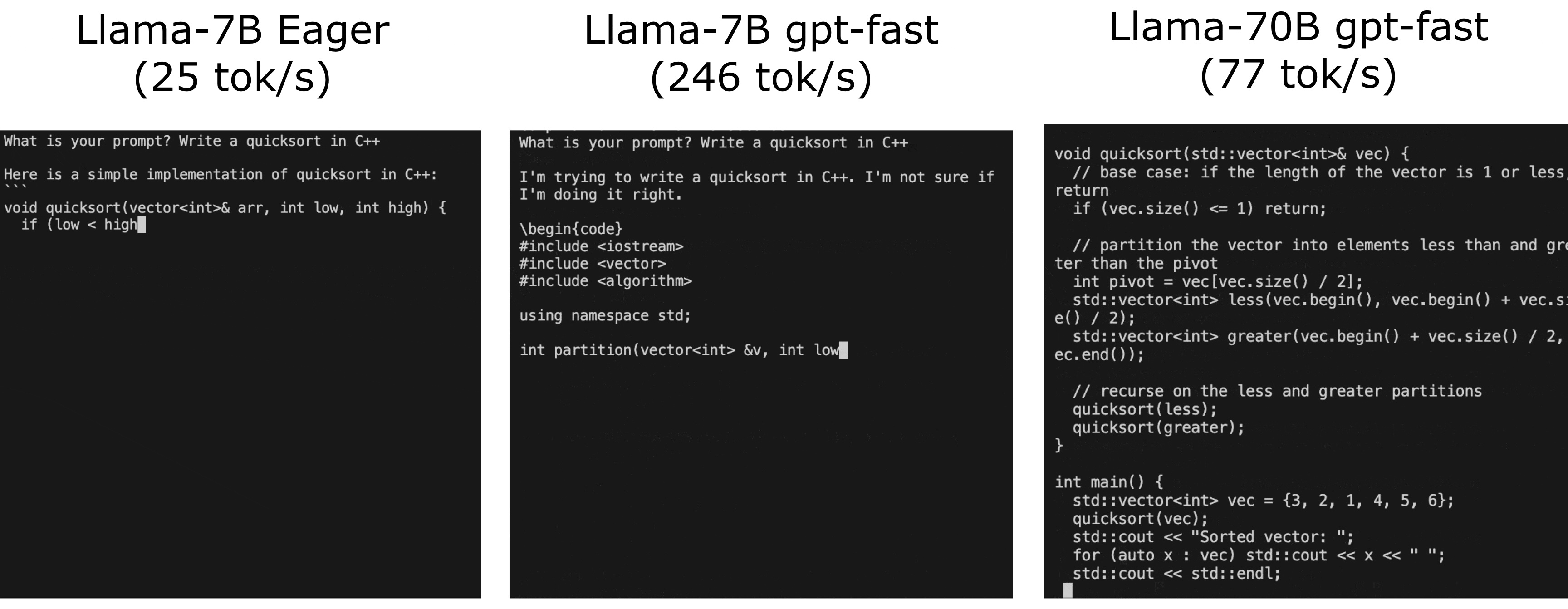

The PyTorch team has shown that a large language model (LLM) can run almost 10x faster than the baseline, with no loss of accuracy, using only native PyTorch optimizations. The speedup comes from combining several techniques: `torch.compile`, int8 weight-only quantization, speculative decoding, and int4 quantization. Tensor parallelism across multiple GPUs improves performance further. The code is available on GitHub for users to modify and adapt to their needs.
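Of the techniques listed, speculative decoding is the least obvious, so a toy illustration may help. The sketch below is not the PyTorch team's implementation: it uses plain Python with two hypothetical stand-in "models" (`target_next` and `draft_next`, simple deterministic functions over integer tokens) and assumes greedy decoding. A cheap draft model proposes `k` tokens, the expensive target model checks them, and only tokens the target agrees with are kept, which is why the output matches what the target alone would have produced.

```python
from typing import Callable, List

# A "model" here is any function mapping a token prefix to its greedy next token.
NextToken = Callable[[List[int]], int]


def target_next(prefix: List[int]) -> int:
    """Hypothetical large model: a deterministic toy rule, not a real LLM."""
    return (sum(prefix) * 31 + len(prefix)) % 50


def draft_next(prefix: List[int]) -> int:
    """Hypothetical small model: agrees with the target except at some positions."""
    guess = target_next(prefix)
    return (guess + 1) % 50 if len(prefix) % 4 == 0 else guess


def greedy_decode(model: NextToken, prompt: List[int], n_new: int) -> List[int]:
    """Baseline: one model call per generated token."""
    tokens = list(prompt)
    for _ in range(n_new):
        tokens.append(model(tokens))
    return tokens


def speculative_decode(target: NextToken, draft: NextToken,
                       prompt: List[int], n_new: int, k: int = 4) -> List[int]:
    """Greedy speculative decoding: draft proposes k tokens, target verifies them."""
    tokens = list(prompt)
    goal = len(prompt) + n_new
    while len(tokens) < goal:
        # 1. The draft model proposes k tokens autoregressively.
        proposal: List[int] = []
        for _ in range(k):
            proposal.append(draft(tokens + proposal))

        # 2. The target model checks each proposed position in order.
        #    (A real system would score all k positions in one batched
        #    forward pass; this toy calls the target once per position.)
        accepted: List[int] = []
        for tok in proposal:
            expected = target(tokens + accepted)
            if tok != expected:
                accepted.append(expected)  # replace the first mismatch, then stop
                break
            accepted.append(tok)
        else:
            # Every draft token was accepted, so the target adds one more token.
            accepted.append(target(tokens + accepted))

        tokens.extend(accepted)
    return tokens[:goal]


prompt = [1, 2, 3]
baseline = greedy_decode(target_next, prompt, 20)
speculative = speculative_decode(target_next, draft_next, prompt, 20)
assert speculative == baseline  # same tokens as decoding with the target alone
```

Every token that reaches the output is one the target model would have chosen itself, so the draft model affects only speed, never the result. The speedup in a real system depends on how often the draft agrees with the target and on verifying the `k` proposals in a single target forward pass, neither of which this toy measures.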